Meta’s handling of ‘SpongeBob’ Holocaust denial post is taken up by independent Oversight Board

Users had complained about the post both before and after Meta enacted a ban on Holocaust denial in October 2020, but the company rejected their criticism

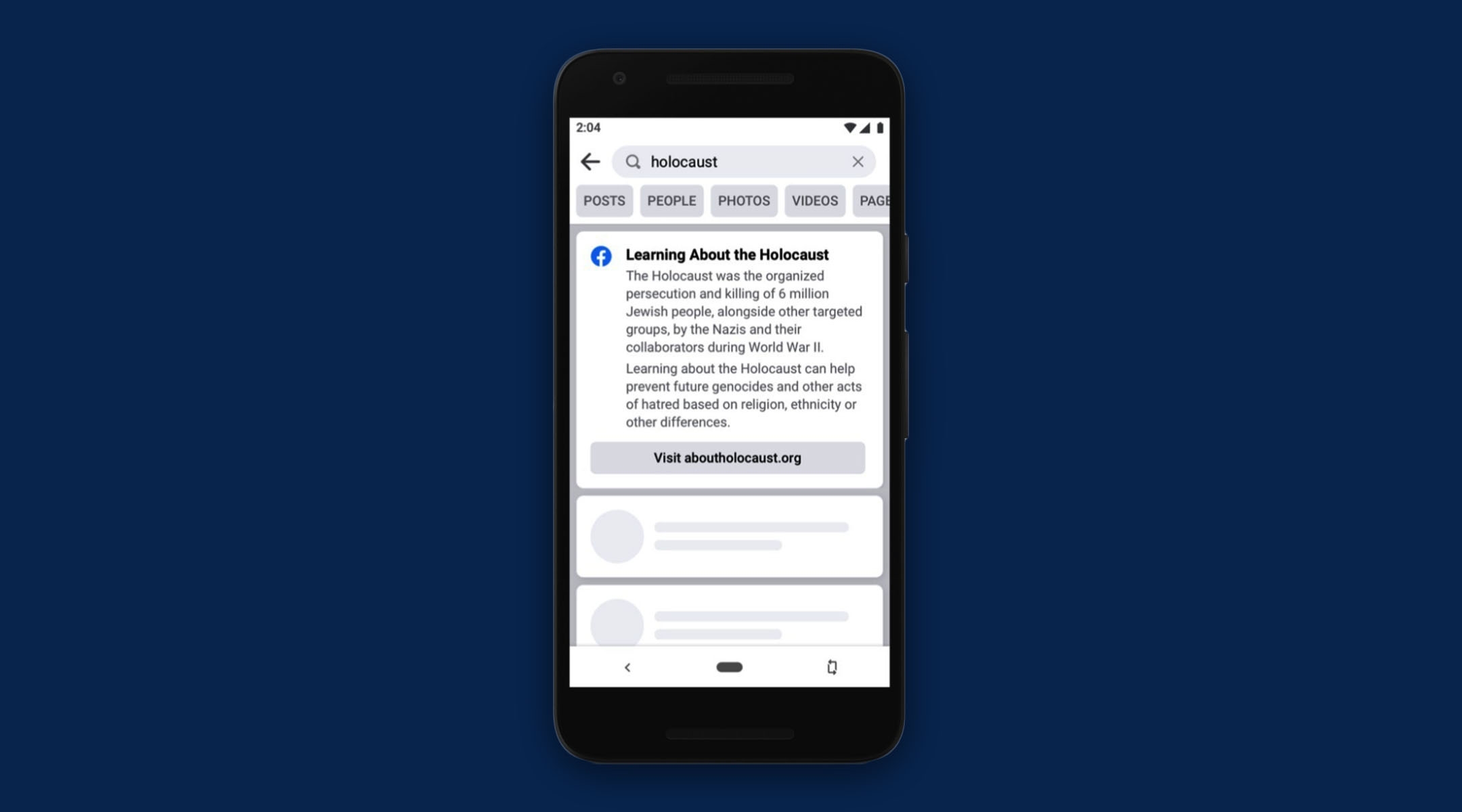

A view of the notification Facebook announced in 2021 that it would use to combat Holocaust denial. (Facebook)

(JTA) — Meta’s independent Oversight Board is scrutinizing a post featuring a “SpongeBob SquarePants” character, in what could be a landmark case for how its platforms Facebook and Instagram moderate Holocaust denial.

The board, whose rulings on individual moderation decisions are binding on Meta but whose broader policy recommendations are not, announced on Thursday that it has taken up a case involving the company's response to complaints about a meme posted in September 2020.

In the meme, the character Squidward appears next to a speech bubble with neo-Nazi talking points disputing the Holocaust, under the title “Fun Facts About The Holocaust.” The anonymous user behind the post, who has about 9,000 followers, appears to have borrowed a popular meme template known as “Fun Facts With Squidward.” The post falsely stated that the Holocaust couldn’t have happened because the infrastructure for carrying out the genocide was not built until after World War II, among other lies and distortions.

Users complained about the post both before and after the company enacted a ban on Holocaust denial in October 2020, the month after the post went up. But Meta had rejected all of their complaints, saying that the post did not violate community standards on hate speech.

Meta responds

In a statement responding to the Oversight Board’s announcement, the company said it had erred and has now removed the post, which had been viewed by about 1,000 people. Fewer than 1,000 had “liked” it, the company said.

The Oversight Board will investigate why the post stayed up for so long. By taking up the case, the board signals that it takes seriously the criticism that existing rules and practices do not adequately address the many forms antisemitism and Holocaust denial take on Meta's platforms.

To start, the board has asked for public comment on several issues related to the company's responsibility for the content appearing on its platforms. These broader issues include research into the harm caused by online Holocaust denial; how Meta's responsibilities relate to questions of dignity, security and freedom of expression; and the use of automation in reviewing content for hate speech.

Policing the platform

For years, social media platforms have come under fire, and faced boycotts, over accusations that they facilitate the spread of antisemitism, racism and extremism, with many Jewish groups lobbying for stricter controls on online speech. In 2018, Meta CEO Mark Zuckerberg seemed to defend, on free speech grounds, users' right to post content featuring Holocaust denial, but the company reversed course in 2020 and implemented a ban. A new challenge soon emerged: the platform's automated algorithms sometimes flagged educational materials about the Holocaust and removed them for violating community standards.

Automated reviews played a role in keeping the Squidward post online. Users complained about it six times: four times before the Holocaust denial ban took effect and twice after. According to the Oversight Board, Meta's automated review process rejected some of the complaints, ruling that the post complied with its policies, while other complaints were dismissed under a new pandemic-related policy that screened for "high risk" cases only. Both humans and algorithms were involved in the decisions, the board said.

This article originally appeared on JTA.org.
