This Supreme Court case might allow Nazis to operate freely on social media

Texas and Florida passed laws curtailing social media sites’ ability to moderate. Will the Supreme Court strike them down?

The director of NetChoice speaks outside the U.S. Supreme Court after the court heard the case on social media moderation. Courtesy of Andrew Caballero-Reynolds/AFP via Getty Images

On Monday, the Supreme Court heard arguments on whether social media sites must allow white supremacists to post with impunity.

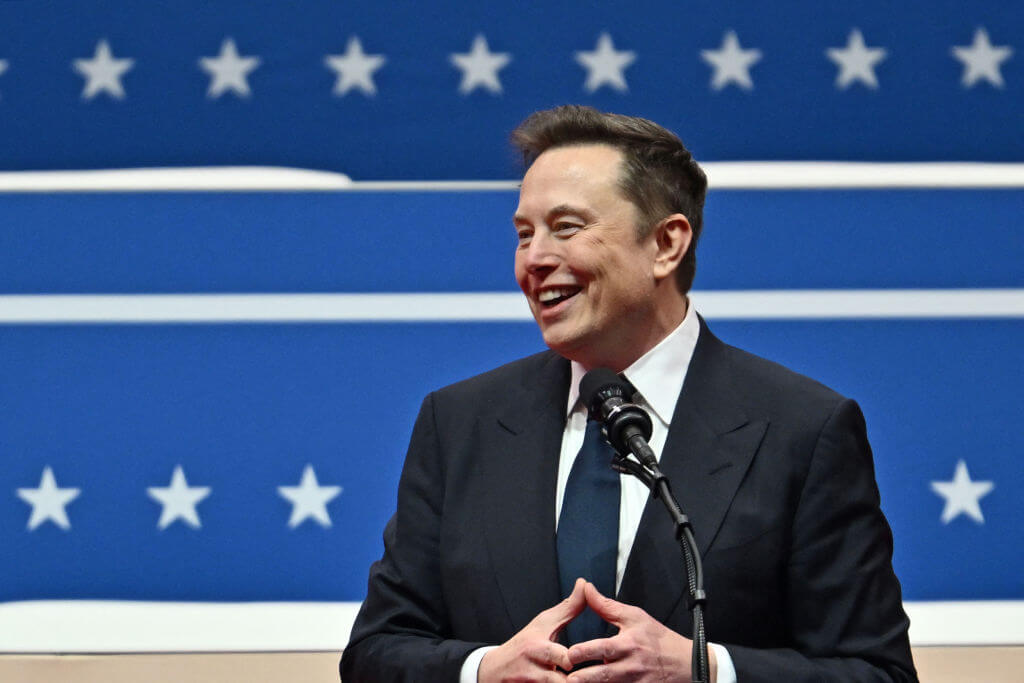

OK, it’s more complicated than that. But the end result could boil down to letting antisemitism and other forms of hate speech run rampant on platforms like Instagram and X, formerly known as Twitter. (Admittedly, since Elon Musk took over, that has already been fairly true on X.)

The two cases in question, Moody v. NetChoice and NetChoice v. Paxton, concern laws passed in Texas and Florida in 2021 that limit the ability of social media sites to remove and moderate content. If enforced, the laws would nearly eliminate the ability of platforms to control the spread of antisemitism or conspiracy theories on their sites, as well as other harmful content such as pro-anorexia or pro-suicide posts.

NetChoice, a tech industry association that represents numerous companies including Meta, TikTok, and Google, has responded that these laws unconstitutionally limit the platforms’ freedom of expression. In amicus briefs, the platforms have also argued that enforcing the laws would flood their sites with hate speech and make them nearly unusable.

(We know this because, well, it’s already happened. On sites like Gab, which bill themselves as havens of free speech absolutism, hate speech runs rampant.)

The two laws are written differently, and they’d have somewhat different applications.

The Texas law prevents sites from removing content based on its “viewpoint,” requiring moderation to be “viewpoint neutral.” It also restricts why and how a platform may moderate, and even how it may let users control their own feeds.

The Florida law is far more sweeping. It includes fines for banning a candidate for office from any platform, and forbids censoring or even attaching warnings to content from a broadly defined “journalistic enterprise.” (Under the Florida statute, a journalistic enterprise includes any company that has published a large volume of content — written, video or audio — to at least 100,000 followers, or 50,000 paying subscribers.)

How did this case end up at the Supreme Court?

While the Texas and Florida laws are couched in neutral language about allowing all viewpoints, legislators specifically intended them to protect conservative speech.

Florida’s legislature passed its law after major social media platforms temporarily banned Donald Trump for inciting violence during the Jan. 6 riots. When he signed it into law, Governor Ron DeSantis said in a statement that the law protected Floridians from “the Silicon Valley elites.”

Even more explicitly, Texas governor Greg Abbott said the Texas law makes sure that “conservative viewpoints cannot be banned on social media.”

The social media companies, for their part — along with many amicus briefs, including one from the American Jewish Committee — argued that without moderation, our feeds would become a cesspool of neo-Nazis and disinformation, making them nearly unusable.

More broadly, the social media companies also want to defend themselves against government oversight of any kind. Generally, protecting private companies from regulation has been a conservative cause, while pressing for more regulation is a liberal priority. These cases invert those party lines, complicating the political stakes.

What’s the basic argument?

Tech companies that want to moderate their sites as they see fit argue that they have the same rights as a newspaper. Newspapers, despite serving as something akin to a public square for discourse, are free to decide what to print and what not to print. The companies also argue that while the First Amendment bars the government from censoring speech, it does not stop private companies from regulating the speech of their users.

“There are things that if the government does, it’s a First Amendment problem, and if a private speaker does it, we recognize that as protected activity,” said Paul Clement, the NetChoice lawyer, during his argument in front of the court.

Amicus briefs in support of the social media platforms also argue that social media users have a First Amendment right to be free from “compelled listening” — essentially, they shouldn’t have to sift through content they do not want to see or read.

But Texas attorney general Ken Paxton argued that the social media sites constitute “the world’s largest telecommunications platforms,” comparing them to phone companies, which are not allowed to limit people’s topics of discussion or restrict service based on political viewpoints. This type of service is known as a “common carriage” — basically, a resource everyone needs, and which providers cannot withhold. (For example, trains must allow all passengers to board, though there are exceptions for drunk and disorderly people.)

The states’ cases also involve Section 230 of the Communications Decency Act, a federal provision that shields platforms from much of the liability for what their users publish and protects their ability to moderate as they see fit.

Do these laws really allow Nazis to operate on social media?

It’s complicated, in part because the laws in question are written somewhat sloppily and leave a lot of open questions. (In fact, one of the arguments against them is that they would inundate the social media companies with frivolous lawsuits.)

Both laws include some attempt to carve out acceptable moderation, specifying that platforms can limit access to content that includes harassment, as well as pornographic or graphically violent content. But neither clearly defines what constitutes acceptable moderation.

Daphne Keller, who directs the Program on Platform Regulation at Stanford’s Cyber Policy Center, wrote in a blog post that the requirement for viewpoint neutrality would essentially force platforms “to decide what topics users can talk about.”

Keller explained that Paxton’s brief in support of the Texas law reads it as allowing platforms to moderate whole categories, such as pornography, but not to draw more “granular” distinctions. To prevent antisemitism, for example, platforms might have to bar all discussions of Jews, or even all discussions of race, to ensure that the moderation was totally neutral. Keller hypothesized that platforms “might try to limit posts to ‘neutral’ or ‘factual’ messages.” That could “get ugly,” she noted, when it comes to questions such as whether statements about gender constitute facts. And proving that potential hate speech (for example, memes about the alleged evils contained in the Talmud) is not factual would impose a fact-checking burden that social media companies are not prepared to handle.

And if neutrality means either allowing or banning everything within a category, that raises new questions about how to define the category. For example, the Florida law allows platforms to remove content promoting terrorism, as long as that standard is applied equally: The law gives the example of permitting a platform to remove content about ISIS, as long as it also removes posts about al-Qaida. But what happens to organizations not universally acknowledged as terrorist groups? Keller brought up the question of how to classify the Proud Boys, and asked whether relying on the ADL’s glossary of hate groups would qualify as non-neutral.

What happened in court — and what’s next?

During Monday’s arguments, the justices posed many hypothetical questions, trying to relate the cases to the various precedents each side relied upon.

The justices seemed skeptical of both sides’ arguments. Justice Samuel Alito asked how much YouTube would weigh if it were truly a newspaper. Other justices wondered whether the laws would also apply to sites such as Uber or Etsy. (These sites use algorithmic ranking that might count as content bias under the laws, though of course, which set of ceramics Etsy prioritizes in your feed has no impact on free political debate.)

Most relevant to the question of a Nazi-filled internet, the states’ lawyers did not define the difference between “important” speech and “vile” speech. This, as Keller noted in a follow-up blog post, leaves the door open for “literal Nazis.” But, she said, “if the statutes did differentiate between important and ‘vile’ speech, they would have even bigger First Amendment problems than they do now.”

The Supreme Court is expected to deliver its decision in the spring, and it’s quite possible that the justices will simply send the cases back to the lower courts to determine exactly how the laws apply to sites like Uber or Etsy before weighing their First Amendment implications.

If they do deliver a more conclusive decision, many onlookers expect the social media sites to win outright. But Keller noted that there were more possibilities, including a partial win for the states — for example, justices might strike down the “viewpoint neutrality” rules but leave other parts of the laws intact. If the court rules that the sites are common carriers, they may be subject to more government regulation than has previously been legal.

And if the states win outright, we can look forward to a neo-Nazi-filled internet. Then again, we already have one. I guess we’ll see how much worse it can get.

Correction: A previous version of this story incorrectly listed a member group of NetChoice. Reddit is not part of NetChoice, but did file an amicus brief in the case.
